As data centers continue to evolve to meet the demands of cloud computing, artificial intelligence, and big data analytics, the limitations of traditional electrical interconnects are becoming increasingly apparent. Optical interconnects offer a transformative solution, providing the bandwidth, low latency, and energy efficiency required for next-generation data center connectivity.

This overview covers recent advances in optical interconnect technology, from photonics applications in future data center networks to the high-performance optical interconnect solutions now reshaping data center connectivity.

Photonics Applications in Future Data Center Networks

The future of data center networking lies in the strategic integration of photonics across all levels of the network hierarchy. As bandwidth requirements continue to escalate, driven by AI, machine learning, and high-performance computing workloads, photonics offers one of the most promising paths to scalable data center connectivity.

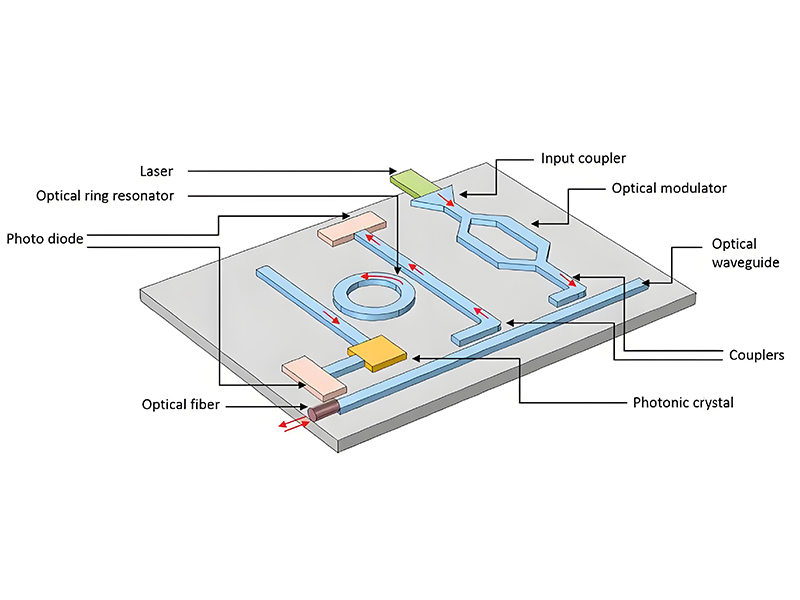

Integrated photonics, in particular, is revolutionizing how data centers handle information flow. By combining optical components directly onto silicon chips, we achieve unprecedented levels of miniaturization, energy efficiency, and cost reduction—critical factors for large-scale data center connectivity solutions.

One of the most promising applications is the silicon photonics transceiver, which can deliver hundreds of gigabits per second per module, with terabit-class devices emerging, while consuming significantly less power than traditional electrical interfaces. These devices are becoming essential building blocks for modern data center connectivity architectures, enabling the seamless flow of data between servers, storage systems, and network switches.

Beyond transceivers, photonics is enabling novel network topologies that were previously impractical. Optical backplanes, for example, allow for reconfigurable, high-bandwidth connections between components within a single chassis, greatly enhancing system flexibility and upgradability. This technology is particularly valuable in modular data center designs where rapid reconfiguration is essential.

Another transformative application is in rack-scale optics, where photonics is deployed at the server rack level to create high-bandwidth, low-latency connections between servers. This approach reduces the complexity of copper cabling, improves energy efficiency, and simplifies scaling as demands increase—all while maintaining robust data center connectivity.

Looking forward, 3D integrated photonics promises even greater advances by stacking photonic and electronic components, creating systems with unprecedented performance density. These innovations will be critical in addressing the ever-growing demands on data center connectivity as we move toward exascale computing and beyond.

Photonics Integration Hierarchy

Chip-Level Integration

Photonics directly integrated with processors and memory

Board-Level Integration

Optical interconnects on printed circuit boards

Rack-Level Integration

Optical backplanes and inter-rack connections

Data Center-Level Integration

Campus-wide optical networks for data center connectivity

[Figure: Performance Comparison: Electrical vs. Photonic Interconnects]

All-Optical Networks: A Systems Perspective

All-optical networks represent a paradigm shift in how data is transmitted and routed within data centers, eliminating the need for costly and energy-intensive optical-electrical-optical (OEO) conversions at intermediate nodes. This approach fundamentally transforms data center connectivity by enabling true end-to-end optical transmission.

From a systems perspective, all-optical networks must be viewed as integrated ecosystems where every component—from light sources and modulators to switches and detectors—works in harmony to maximize performance and reliability. This holistic approach is essential for creating scalable, efficient data center connectivity solutions.

One of the key advantages of all-optical networks is their ability to exploit wavelength division multiplexing (WDM), which allows multiple data streams to be transmitted simultaneously over a single fiber by using different wavelengths of light. This dramatically increases the capacity of data center connectivity infrastructure without requiring additional physical cabling.
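The capacity gain from WDM is simple multiplication, which a short sketch can make concrete. The function names and the figures used (8 wavelengths, 100 Gbps per wavelength, 3,200 Gbps of demand) are illustrative assumptions, not values from any particular product.

```python
import math


def wdm_fiber_capacity_gbps(num_wavelengths: int, rate_per_wavelength_gbps: float) -> float:
    """Aggregate capacity of one fiber carrying independent wavelength channels."""
    if num_wavelengths < 0 or rate_per_wavelength_gbps < 0:
        raise ValueError("inputs must be non-negative")
    return num_wavelengths * rate_per_wavelength_gbps


def fibers_needed(total_demand_gbps: float, num_wavelengths: int,
                  rate_per_wavelength_gbps: float) -> int:
    """Number of fibers required to carry a demand, rounded up to whole fibers."""
    per_fiber = wdm_fiber_capacity_gbps(num_wavelengths, rate_per_wavelength_gbps)
    if per_fiber <= 0:
        raise ValueError("per-fiber capacity must be positive")
    return math.ceil(total_demand_gbps / per_fiber)


# Hypothetical demand of 3,200 Gbps between two pods.
print(fibers_needed(3200, 1, 100))  # single wavelength per fiber -> 32
print(fibers_needed(3200, 8, 100))  # 8-wavelength WDM -> 4
```

Under these assumed numbers the same demand fits on one eighth of the fibers, which is the "no additional physical cabling" effect described above.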

Reconfigurable optical add-drop multiplexers (ROADMs) are another critical component, enabling dynamic wavelength management and routing. These devices allow network operators to remotely configure paths for different wavelengths, providing unprecedented flexibility in managing data center connectivity and quickly adapting to changing traffic patterns.
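As a rough illustration of what remote wavelength configuration involves, the sketch below assigns wavelengths to paths with a first-fit rule. It assumes no wavelength conversion, so a path must use the same wavelength index on every fiber span it crosses. The `WavelengthPlanner` class and the node names are hypothetical and do not correspond to any real ROADM management interface.

```python
from typing import Dict, List, Optional, Set, Tuple

Link = Tuple[str, ...]


class WavelengthPlanner:
    """Tracks which wavelength indices are in use on each fiber span."""

    def __init__(self, num_wavelengths: int) -> None:
        self.num_wavelengths = num_wavelengths
        self.in_use: Dict[Link, Set[int]] = {}

    @staticmethod
    def _links(path: List[str]) -> List[Link]:
        # Sort endpoints so A-B and B-A refer to the same span.
        return [tuple(sorted(pair)) for pair in zip(path, path[1:])]

    def provision(self, path: List[str]) -> Optional[int]:
        """First-fit: pick the lowest wavelength free on every span of the path."""
        links = self._links(path)
        for wavelength in range(self.num_wavelengths):
            if all(wavelength not in self.in_use.get(link, set()) for link in links):
                for link in links:
                    self.in_use.setdefault(link, set()).add(wavelength)
                return wavelength
        return None  # blocked: no common free wavelength along the path

    def release(self, path: List[str], wavelength: int) -> None:
        """Free a wavelength on every span of the path."""
        for link in self._links(path):
            self.in_use.get(link, set()).discard(wavelength)


planner = WavelengthPlanner(num_wavelengths=2)
first = planner.provision(["A", "B", "C"])   # takes wavelength 0 on A-B and B-C
second = planner.provision(["B", "C", "D"])  # B-C already uses 0, so takes 1
```

First-fit is only one possible policy; it is used here because it is easy to follow, not because the source prescribes it.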

All-optical switching is perhaps the most transformative aspect of these networks, enabling data to be routed entirely in the optical domain. This eliminates the latency and energy consumption associated with OEO conversions, resulting in faster, more efficient data center connectivity. Recent advances in silicon photonics have made all-optical switches smaller, faster, and more reliable than ever before.

From a systems engineering standpoint, deploying all-optical networks requires careful consideration of signal integrity, noise management, and fault tolerance. Network monitoring and management systems must also evolve to handle the unique characteristics of optical signals, ensuring robust and reliable data center connectivity across the entire infrastructure.

As all-optical networks mature, we're seeing the emergence of software-defined optical networking (SDON), which brings the flexibility of software-defined networking (SDN) to the optical layer. This allows for dynamic, programmatic control of data center connectivity, enabling more efficient resource utilization and faster response to changing demands.

Key Components of All-Optical Networks

- Wavelength Division Multiplexers - Enable multiple data streams on a single fiber

- Optical Amplifiers - Boost signal strength without OEO conversion

- All-Optical Switches - Route signals entirely in optical domain

- Reconfigurable Add-Drop Multiplexers - Dynamic wavelength management

- Optical Monitoring Systems - Ensure signal integrity across the network

Systems-Level Benefits

By eliminating OEO conversions, all-optical networks reduce latency by up to 90% while decreasing power consumption by 70-80% compared to traditional electrical networks, dramatically improving data center connectivity efficiency.

All-Optical Network Architecture for Data Centers

Edge Access Layer

Optical interfaces at server and storage endpoints, providing direct optical data center connectivity from compute resources.

Aggregation Layer

Optical switches and WDM components that aggregate traffic and manage wavelength allocation for efficient data center connectivity.

Core Backbone

High-capacity all-optical mesh network providing interconnection between data center pods with reconfigurable data center connectivity paths.

Elastic Data Center Networks Based on High-Speed MIMO OFDM

The convergence of Multiple-Input Multiple-Output (MIMO) technology and Orthogonal Frequency-Division Multiplexing (OFDM) is creating a new paradigm for elastic data center networks capable of dynamically adapting to varying traffic demands. This innovative approach is redefining data center connectivity by providing unprecedented flexibility and efficiency.

MIMO technology, which uses multiple transmitters and receivers to exploit multipath propagation, significantly increases data throughput and link reliability. When combined with OFDM—where a high-data-rate signal is split into multiple lower-rate signals transmitted simultaneously over different frequency subcarriers—the result is a robust, high-capacity solution for modern data center connectivity requirements.
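The OFDM half of this description can be sketched in a few lines: bits are mapped onto subcarriers, and an inverse discrete Fourier transform combines them into one waveform. This is a minimal sketch that assumes QPSK on every subcarrier and an ideal channel; the function names and the 8-subcarrier size are illustrative, and MIMO processing and the optical front end are left out.

```python
import cmath
from typing import List, Sequence

# Gray-mapped QPSK: two bits select one of four points (an illustrative choice).
QPSK = {(0, 0): 1 + 1j, (0, 1): -1 + 1j, (1, 1): -1 - 1j, (1, 0): 1 - 1j}


def idft(freq: Sequence[complex]) -> List[complex]:
    """Inverse DFT: sum all subcarriers into one time-domain waveform."""
    n = len(freq)
    return [
        sum(freq[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def dft(samples: Sequence[complex]) -> List[complex]:
    """Forward DFT: separate the waveform back into its subcarriers."""
    n = len(samples)
    return [
        sum(samples[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def ofdm_modulate(bits: Sequence[int], num_subcarriers: int) -> List[complex]:
    """Split the bit stream across subcarriers, two bits per subcarrier."""
    if len(bits) != 2 * num_subcarriers:
        raise ValueError("QPSK needs exactly two bits per subcarrier")
    symbols = [QPSK[(bits[2 * i], bits[2 * i + 1])] for i in range(num_subcarriers)]
    return idft(symbols)


def ofdm_demodulate(samples: Sequence[complex]) -> List[int]:
    """Recover bits from the sign of each subcarrier's imaginary and real part."""
    bits: List[int] = []
    for point in dft(samples):
        bits += [int(point.imag < 0), int(point.real < 0)]
    return bits


payload = [0, 0, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 1]
waveform = ofdm_modulate(payload, num_subcarriers=8)
recovered = ofdm_demodulate(waveform)
```

Each of the eight subcarriers carries only a quarter of a byte per symbol, yet together they carry the full 16-bit payload in one symbol period.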

One of the key advantages of MIMO OFDM for data center networks is its elasticity. Unlike traditional fixed-bandwidth connections, MIMO OFDM systems can dynamically allocate bandwidth based on real-time demands, ensuring efficient utilization of network resources. This elasticity is critical for modern data centers with highly variable workloads, optimizing data center connectivity for both peak and off-peak periods.
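One way to picture this elasticity is as a periodic re-division of a fixed pool of subcarriers among traffic classes. The sketch below uses a proportional split with largest-remainder rounding; the allocation policy, the function name, the tenant labels, and the demand figures are all assumptions made for illustration.

```python
from typing import Dict


def allocate_subcarriers(demands_gbps: Dict[str, float], total_subcarriers: int) -> Dict[str, int]:
    """Divide subcarriers in proportion to demand (demands assumed non-negative)."""
    total_demand = sum(demands_gbps.values())
    if total_demand <= 0:
        return {name: 0 for name in demands_gbps}

    # Ideal fractional share for each traffic class.
    shares = {name: d / total_demand * total_subcarriers for name, d in demands_gbps.items()}
    # Start from the rounded-down share.
    allocation = {name: int(share) for name, share in shares.items()}

    # Hand the leftover subcarriers to the largest fractional remainders.
    leftover = total_subcarriers - sum(allocation.values())
    by_remainder = sorted(demands_gbps, key=lambda n: shares[n] - allocation[n], reverse=True)
    for name in by_remainder[:leftover]:
        allocation[name] += 1
    return allocation


# Hypothetical daytime and overnight demand on a 64-subcarrier link.
daytime = allocate_subcarriers({"training": 300, "storage": 100, "web": 100}, 64)
overnight = allocate_subcarriers({"training": 100, "storage": 350, "web": 50}, 64)
```

Re-running the function as demand shifts moves subcarriers between classes without changing the physical link, which is the behavior a fixed-bandwidth connection cannot offer.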

High-speed MIMO OFDM systems also offer superior spectral efficiency, enabling them to transmit more data per unit of bandwidth than traditional modulation schemes. This is particularly valuable in data center environments where physical space and fiber resources are at a premium, maximizing the value of existing data center connectivity infrastructure.

From a practical implementation standpoint, these systems leverage advanced digital signal processing (DSP) to manage the complexity of MIMO OFDM modulation and demodulation. Modern DSP chips can handle the computational demands of these algorithms, enabling real-time adaptation to changing channel conditions and ensuring reliable data center connectivity even in challenging environments.

Another significant benefit is improved tolerance to signal impairments such as dispersion and crosstalk, which are common in high-density data center environments. MIMO OFDM's inherent robustness makes it an ideal solution for maintaining high-quality data center connectivity across increasingly complex network topologies.
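A common way OFDM systems obtain this tolerance is a cyclic prefix combined with a one-tap equalizer per subcarrier. Neither technique is named in the text above, so the sketch below should be read as one plausible mechanism. The channel taps, the prefix length, and the function names are illustrative assumptions, and noise and MIMO processing are ignored.

```python
import cmath
from typing import List, Sequence


def dft(x: Sequence[complex], inverse: bool = False) -> List[complex]:
    """Direct O(n^2) discrete Fourier transform; adequate for a small demo."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [
        sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]
    return [v / n for v in out] if inverse else out


def dispersive_channel(samples: Sequence[complex], taps: Sequence[float]) -> List[complex]:
    """Each output is a weighted sum of the current and earlier input samples."""
    return [
        sum(h * samples[n - k] for k, h in enumerate(taps) if n - k >= 0)
        for n in range(len(samples))
    ]


def send_ofdm_symbol(symbols: Sequence[complex], taps: Sequence[float], cp_len: int) -> List[complex]:
    """Send one OFDM symbol through the channel and return the equalized subcarriers."""
    n = len(symbols)
    if cp_len < len(taps) - 1 or len(taps) > n:
        raise ValueError("cyclic prefix must cover the channel memory")
    time_domain = dft(symbols, inverse=True)
    # Cyclic prefix: copy the tail of the symbol to its front.
    with_prefix = time_domain[n - cp_len:] + time_domain
    # Discard the prefix at the receiver; the remainder is a circular convolution.
    received = dispersive_channel(with_prefix, taps)[cp_len:]
    # One-tap equalizer: divide each subcarrier by the channel response there.
    channel_response = dft(list(taps) + [0.0] * (n - len(taps)))
    return [y / h for y, h in zip(dft(received), channel_response)]


sent = [1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j, 1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]
equalized = send_ofdm_symbol(sent, taps=[1.0, 0.5, 0.2], cp_len=2)
```

With the prefix at least as long as the channel memory, the smearing between samples reduces to a single complex gain per subcarrier, which is why equalization stays cheap even as dispersion grows.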

As data rates continue to increase toward 400Gbps and beyond, MIMO OFDM is emerging as a critical enabling technology. Its ability to scale with higher frequencies and more complex modulation formats positions it as a long-term solution for data center connectivity needs, supporting the ever-growing demands of next-generation applications and services.

MIMO OFDM Advantages for Data Centers

Dynamic Bandwidth Allocation

Adapts to traffic demands in real-time

Spectral Efficiency

Maximizes data throughput per frequency unit

Link Reliability

Mitigates interference and signal degradation

Scalability

Supports future data rate increases

Implementation Considerations

MIMO OFDM systems require advanced DSP capabilities and careful calibration to achieve optimal performance in dense data center environments. When properly implemented, they provide a future-proof foundation for scalable data center connectivity.

Elastic Bandwidth Allocation in MIMO OFDM Networks

MIMO OFDM enables dynamic reallocation of bandwidth based on application demands, optimizing data center connectivity resources throughout the day.

Petabit Bufferless Optical Switching for Data Center Networks

As data center traffic continues to grow exponentially, the need for higher-capacity switching solutions has become paramount. Petabit bufferless optical switching represents a breakthrough technology that addresses this challenge, enabling unprecedented throughput while minimizing latency—critical factors for next-generation data center connectivity.

Traditional electronic packet switches face fundamental limitations in terms of both bandwidth and energy efficiency as they scale to petabit capacities. Bufferless optical switches overcome these limitations by leveraging the unique properties of light, enabling data to be routed without converting it to electrical signals or storing it in buffers—dramatically improving data center connectivity performance.

The bufferless nature of these switches eliminates the latency associated with buffer queuing, a significant bottleneck in traditional switching architectures. This makes them particularly well-suited for latency-sensitive applications such as high-frequency trading, real-time analytics, and AI model training—all of which depend on ultra-fast data center connectivity.

One of the most promising implementations of petabit bufferless optical switching is based on silicon photonics, which allows thousands of optical components to be integrated on a single chip. This enables compact, high-density switches that handle petabit-per-second traffic volumes while consuming significantly less power than equivalent electronic switches, reducing both the operational cost and the environmental impact of data center connectivity infrastructure.

These switches employ advanced arbitration algorithms to manage contention resolution without relying on buffers. By dynamically rerouting traffic and leveraging wavelength and space division multiplexing, they can efficiently handle traffic bursts and maintain high throughput even under heavy load conditions—ensuring reliable data center connectivity regardless of network congestion.
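To show what buffer-free contention resolution can look like, the sketch below arbitrates a single time slot. Each output port accepts as many simultaneous requests as it has wavelengths, and a rotating priority decides which inputs lose when an output is oversubscribed. This is a deliberately simplified, hypothetical arbiter rather than the algorithm of any specific switch; rejected inputs are assumed to retry or reroute at the sender, since the switch itself stores nothing.

```python
from typing import Dict, List, Tuple


def arbitrate_slot(
    requests: Dict[int, int],
    wavelengths_per_output: int,
    priority_start: int,
    num_inputs: int,
) -> Tuple[Dict[int, Tuple[int, int]], List[int]]:
    """Resolve one time slot.

    requests maps input port -> requested output port.
    Returns (grants, rejected), where grants maps
    input port -> (output port, wavelength index).
    """
    grants: Dict[int, Tuple[int, int]] = {}
    rejected: List[int] = []
    used: Dict[int, int] = {}  # output port -> wavelengths already granted

    # Visit inputs in rotating order so no port is permanently favored.
    for offset in range(num_inputs):
        inp = (priority_start + offset) % num_inputs
        if inp not in requests:
            continue
        out = requests[inp]
        next_free = used.get(out, 0)
        if next_free < wavelengths_per_output:
            grants[inp] = (out, next_free)
            used[out] = next_free + 1
        else:
            rejected.append(inp)  # nothing is queued inside the switch
    return grants, rejected


# Three inputs contend for output 2, which has two wavelengths.
slot_requests = {0: 2, 1: 2, 2: 2, 3: 1}
grants_a, rejected_a = arbitrate_slot(slot_requests, 2, priority_start=0, num_inputs=4)
grants_b, rejected_b = arbitrate_slot(slot_requests, 2, priority_start=2, num_inputs=4)
```

Advancing `priority_start` each slot changes which input is turned away, so the cost of contention is spread across ports over time.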

Another key advantage is the inherent parallelism of optical switching, which allows multiple data streams to be processed simultaneously. This parallelism is essential for achieving petabit-scale capacities and enables more efficient utilization of network resources, further enhancing the performance of data center connectivity systems.

As data centers continue to scale out and adopt more distributed architectures, the ability to deploy petabit-scale switches becomes increasingly important. These devices form the backbone of next-generation data center connectivity, enabling the seamless flow of data between tens of thousands of servers and storage systems while maintaining the low latency required for modern applications.

Looking forward, the continued development of petabit bufferless optical switching will be critical for supporting the exascale computing era, where data centers will need to handle hundreds of petabytes of data per day. These advances will ensure that data center connectivity remains a driver of innovation rather than a limiting factor in the evolution of computing infrastructure.

Petabit Switching Technology Comparison

| Technology | Max Capacity | Latency | Power Efficiency |
|---|---|---|---|
| Electronic Packet Switching | 100s of Tbps | 100s of ns | Low |
| Optical Circuit Switching | 10s of Tbps | 10s-100s of ms | Medium |
| Bufferless Optical Switching | 10s of Pbps | 10s of ns | High |

Key Innovations

- Silicon photonics integration for high-density ports

- Advanced contention resolution algorithms

- Combined wavelength and space switching

- Sub-nanosecond reconfiguration time

[Figure: Scaling Properties of Bufferless Optical Switches]

Optical Interconnects in High-Performance Data Centers

High-performance data centers (HPDCs) represent the cutting edge of computing infrastructure, powering the most demanding applications from scientific research to artificial intelligence. Within these facilities, optical interconnects have become indispensable, providing the high-bandwidth, low-latency data center connectivity required to support extreme computational workloads.

The deployment of optical interconnects in HPDCs spans multiple levels of the network hierarchy, from chip-to-chip and board-to-board connections within servers to rack-to-rack and row-to-row connections throughout the data center. This end-to-end optical approach ensures consistent performance and maximizes the efficiency of data center connectivity across the entire infrastructure.

One of the most critical applications is in high-performance computing (HPC) clusters, where thousands of servers work in parallel to solve complex problems. These environments require tightly coupled data center connectivity with extremely low latency to ensure efficient parallel processing. Optical interconnects provide the necessary performance, enabling the rapid exchange of data between nodes that's essential for HPC workloads.

AI and machine learning training clusters represent another key application area. These systems require massive amounts of data to be moved between GPUs and storage systems, often in highly irregular patterns. Optical interconnects, with their high bandwidth and flexible routing capabilities, are well suited to these demanding data center connectivity requirements, accelerating training times and enabling more complex models.

In terms of implementation, high-performance data centers are increasingly adopting leaf-spine architectures with optical connections between switches. This design provides non-blocking connectivity between all servers, ensuring that data center connectivity doesn't become a bottleneck. Modern implementations use 100Gbps and 400Gbps optical transceivers, with 800Gbps and 1.6Tbps solutions already emerging for next-generation deployments.
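Whether a leaf switch can be non-blocking in the bandwidth sense comes down to comparing its server-facing capacity with its spine-facing capacity. The sketch below computes that oversubscription ratio. The port counts and function names are illustrative assumptions, and a ratio of 1.0 or less is a necessary condition only, since real fabrics also depend on routing and load balancing.

```python
def oversubscription_ratio(
    server_ports: int,
    server_port_gbps: float,
    uplink_ports: int,
    uplink_port_gbps: float,
) -> float:
    """Server-facing bandwidth divided by spine-facing bandwidth on one leaf."""
    downlink = server_ports * server_port_gbps
    uplink = uplink_ports * uplink_port_gbps
    if uplink <= 0:
        raise ValueError("leaf needs at least one uplink with positive speed")
    return downlink / uplink


def is_non_blocking(ratio: float) -> bool:
    """A leaf can forward all server traffic upward only if the ratio is <= 1."""
    return ratio <= 1.0


# Hypothetical leaf: 32 x 100G to servers, 8 x 400G to the spine layer.
balanced = oversubscription_ratio(32, 100, 8, 400)
print(balanced, is_non_blocking(balanced))      # 1.0 True

# Same uplinks, but 48 servers attached.
overloaded = oversubscription_ratio(48, 100, 8, 400)
print(overloaded, is_non_blocking(overloaded))  # 1.5 False
```

Moving uplinks from 400 Gbps to 800 Gbps transceivers halves the ratio without adding fibers, which is one reason faster optics matter for these designs.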

Power consumption is a major concern in HPDCs, and optical interconnects offer significant advantages over electrical alternatives. By reducing the energy required for data transmission, optical technologies lower the power drawn by networking equipment and the cooling load that comes with it, which reduces operational costs while maintaining the high-performance data center connectivity that these facilities demand.

Another important consideration is cable management. High-density optical interconnects, including ribbon fibers and parallel optics, reduce the physical footprint of cabling systems, making it easier to manage the complex data center connectivity infrastructure in HPDCs. This not only improves airflow and cooling efficiency but also simplifies maintenance and upgrades.

Looking to the future, high-performance data centers will increasingly rely on coherent optical technologies, which can transmit data over longer distances with higher spectral efficiency. This will enable more flexible and scalable data center connectivity architectures, including disaggregated data centers where compute, storage, and memory resources are distributed across facilities but connected via high-speed optical links.

Ultimately, the continued advancement of optical interconnect technologies is essential for enabling the next generation of high-performance data centers. As computational demands continue to grow, these innovations will ensure that data center connectivity remains a foundation for progress rather than a limiting factor, supporting breakthroughs in science, engineering, and technology for years to come.

Optical Interconnect Deployment Layers

Chip-to-Chip

On-package optical links for inter-processor communication

Board-to-Board

Backplane optics connecting components within servers

Server-to-Switch

Front-panel optics for data center connectivity from servers to top-of-rack switches

Switch-to-Switch

High-bandwidth optical links between network switches

Data Center-to-Data Center

Long-haul optical connections for geographically distributed data center connectivity

Performance Benefits

In high-performance data centers, optical interconnects reduce latency by 50-80% compared to electrical alternatives while increasing bandwidth density by 10x or more, dramatically improving overall data center connectivity performance.

Optical Interconnect Requirements by Application

High-Performance Computing

- Ultra-low latency (<1µs)

- High bisection bandwidth

- Deterministic performance

- 3D torus/mesh topologies

AI/ML Training Clusters

- High aggregate bandwidth

- Adaptive routing capabilities

- GPU-optimized data center connectivity

- Support for collective operations

Big Data Analytics

- High throughput to storage

- Efficient multicast capabilities

- Scalable data center connectivity

- Quality of service guarantees
